Introduction

Application performance is a mission-critical concern in today’s hyper-connected, digital-first economy. For organizations seeking to support evolving user expectations and dynamic market demands, even a single instance of downtime or sluggishness can result in lost revenue and eroded trust. Staying ahead means not just reacting to issues as they surface, but proactively building resilience and speed into every layer of your app infrastructure. Solutions such as those from eG Innovations help businesses continuously optimize their ecosystems, raising the standard for what users expect from digital services.

Proactive optimization is not a one-time project, but an ongoing commitment to visibility, agility, and continuous improvement. By integrating early-warning systems and flexible architectures, organizations are better positioned to deploy, monitor, and optimize features at scale. These practices also allow for distinctive, seamless experiences that attract and retain customers in a crowded digital market.

Leadership teams are increasingly investing in scalable, cloud-native architectures and data-driven processes, recognizing that speed and reliability directly impact brand reputation. The right strategies can determine how effectively an organization prevents bottlenecks, predicts performance incidents, and aligns technical operations with evolving business needs.

Adopting these best practices also drives measurable business impact, from faster innovation cycles to higher customer satisfaction scores and increased operational efficiency. With application performance now firmly tied to business outcomes, leveraging proactive strategies is vital for anyone seeking a durable edge.

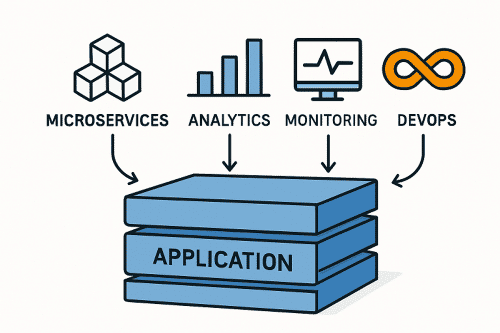

Adopt Microservices Architecture

The transition away from monolithic systems to microservices has become foundational for companies aiming to build adaptable, future-ready digital platforms. A microservices architecture breaks large applications into independently deployable components, each responsible for a specific task. This modularity enables rapid iteration, minimizes disruptions during updates, and optimizes resource allocation across cloud environments. Companies that have shifted to microservices have reported a 20-30% improvement in development speed and time-to-market, a crucial metric in industries where responsiveness is a competitive currency. Furthermore, teams can scale high-traffic services independently, without overprovisioning the rest of the system or creating interdependencies that let one failure cascade into an outage.
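To make the idea of scaling services independently more concrete, here is a minimal sketch of how a replica count could be derived per service from its own traffic. The service names, the requests-per-second figures, and the `target_rps_per_replica` value are all hypothetical, and real autoscalers weigh more signals than this.

```python
import math


def desired_replicas(current_rps: float, target_rps_per_replica: float,
                     min_replicas: int = 1, max_replicas: int = 20) -> int:
    """Size one service from its own load, clamped to a safe range."""
    if target_rps_per_replica <= 0:
        raise ValueError("target_rps_per_replica must be positive")
    needed = math.ceil(current_rps / target_rps_per_replica)
    return max(min_replicas, min(max_replicas, needed))


# Hypothetical per-service traffic, in requests per second.
traffic = {"checkout": 900.0, "search": 2400.0, "profile": 40.0}

# Each service is sized on its own; a busy "search" does not inflate "profile".
plan = {name: desired_replicas(rps, target_rps_per_replica=200.0)
        for name, rps in traffic.items()}
print(plan)  # {'checkout': 5, 'search': 12, 'profile': 1}
```

In a monolith, the whole application would have to be scaled to meet the busiest component's demand; here only the busy service grows.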

Implement Real-Time Analytics

Staying ahead of performance issues requires visibility that goes beyond historical reports. Real-time analytics platforms ingest and process live operational data, granting developers and operations teams immediate insight into latency spikes, throughput dips, or user behavior anomalies. This enables rapid, evidence-based decision-making and course correction before minor glitches escalate into major incidents.

Real-time monitoring provides a holistic, up-to-the-minute understanding of app health and availability. When paired with machine learning algorithms, teams can not only see what’s happening but also anticipate trends and automate responses to predictable threats. Solutions featuring powerful dashboards and custom alerting ensure the right people are notified instantly, facilitating near-continuous improvement.
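As a rough illustration of catching a latency spike as it happens, the sketch below keeps a rolling window of recent samples and flags any new sample that sits far above the baseline. The class name, window size, and thresholds are assumptions chosen for the example; production platforms typically use more sophisticated models.

```python
from collections import deque
from statistics import mean, stdev


class LatencyMonitor:
    """Flags latency samples that rise sharply above a rolling baseline."""

    def __init__(self, window: int = 50, z_threshold: float = 3.0,
                 min_samples: int = 10, min_spread_ms: float = 1.0):
        self.samples = deque(maxlen=window)  # recent non-spike latencies
        self.z_threshold = z_threshold
        self.min_samples = min_samples
        self.min_spread_ms = min_spread_ms   # floor to avoid dividing by ~0

    def observe(self, latency_ms: float) -> bool:
        """Record one sample; return True if it looks like a spike."""
        is_spike = False
        if len(self.samples) >= self.min_samples:
            baseline = mean(self.samples)
            spread = max(stdev(self.samples), self.min_spread_ms)
            is_spike = (latency_ms - baseline) / spread > self.z_threshold
        # Keep spikes out of the baseline so one incident does not mask the next.
        if not is_spike:
            self.samples.append(latency_ms)
        return is_spike


monitor = LatencyMonitor()
stream = [100, 102, 98, 101, 99, 103, 97, 100, 102, 98, 101, 450, 99]
alerts = [ms for ms in stream if monitor.observe(ms)]
print(alerts)  # [450]
```

In practice, the `True` result would feed an alerting channel or an automated response rather than a list.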

Utilize Synthetic Monitoring

Unlike traditional monitoring tools, which rely primarily on passive data collection, synthetic monitoring uses scripted transactions that simulate real end-user interactions from a range of locations and device types. This proactive approach allows organizations to identify and address issues such as slow load times, intermittent glitches, or unpredictable downtime before they affect actual users. Continuous synthetic testing helps keep service levels consistent and reliable, even during periods of rapid scaling, high traffic, or complex deployment cycles that might otherwise introduce instability.

By replicating key user journeys around the clock, organizations obtain actionable insights that can be correlated with real-time production data and crucial business KPIs. Combining synthetic monitoring with real-user monitoring creates a comprehensive monitoring framework that is especially effective for troubleshooting and analyzing performance within complex, distributed ecosystems. Synthetic checks provide broad, predictable coverage, while real-user data adds depth, enabling teams to proactively maintain service quality and user satisfaction.
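A scripted user journey can be sketched as an ordered list of timed steps, each checked against a response-time budget. The helper names below (`http_get_step`, `run_journey`, `StepResult`) are illustrative, not part of any monitoring product, and a real synthetic monitor would also run from multiple locations and on a schedule.

```python
import time
import urllib.request
from dataclasses import dataclass
from typing import Callable


@dataclass
class StepResult:
    name: str
    ok: bool
    elapsed_s: float
    error: str = ""


def http_get_step(url: str, timeout_s: float = 5.0) -> Callable[[], None]:
    """Build a step that fetches a URL and fails on a non-200 response."""
    def step() -> None:
        with urllib.request.urlopen(url, timeout=timeout_s) as resp:
            if resp.status != 200:
                raise RuntimeError(f"unexpected status {resp.status}")
    return step


def run_journey(steps: list[tuple[str, Callable[[], None]]],
                budget_s: float) -> list[StepResult]:
    """Run scripted steps in order, timing each; stop at the first failure."""
    results = []
    for name, action in steps:
        start = time.perf_counter()
        try:
            action()
            elapsed = time.perf_counter() - start
            ok = elapsed <= budget_s
            error = "" if ok else f"exceeded {budget_s}s budget"
        except Exception as exc:  # any exception counts as a failed step
            elapsed = time.perf_counter() - start
            ok, error = False, str(exc)
        results.append(StepResult(name, ok, elapsed, error))
        if not ok:
            break  # later steps depend on earlier ones, as in a real user flow
    return results
```

A journey might then be declared as `run_journey([("home", http_get_step("https://example.com/"))], budget_s=2.0)`, where the URL is a placeholder for a real page in the flow being tested.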

Conduct Regular Load Testing

In a world where usage spikes can happen without warning, regular load testing forms the cornerstone of resilient application infrastructure. Tools such as k6, Gatling, and Artillery simulate extreme conditions, pushing applications to their limits to reveal performance bottlenecks, memory leaks, or poor scaling under high demand. These tests should model realistic traffic scenarios, including peak usage, geographic dispersal, and concurrent session volumes, to inform capacity planning. Robust load testing practices not only verify current infrastructure but also inform future investments and cloud spending strategies. According to a Gartner report, regular load assessments reduce the risk of unplanned outages and ensure digital environments can flex efficiently alongside organizational growth.
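The core loop of a load test can be sketched with the Python standard library alone: fire many concurrent calls at a target, then summarize latency percentiles and the error rate. This is not how k6, Gatling, or Artillery are implemented or configured; the function name and parameters are assumptions for illustration, and `target` stands in for whatever request the test should issue.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import quantiles
from typing import Callable


def run_load_test(target: Callable[[], None], total_requests: int,
                  concurrency: int) -> dict:
    """Call `target` total_requests times (at least 2) across worker threads."""
    def timed_call(_: int) -> tuple[float, bool]:
        start = time.perf_counter()
        try:
            target()
            ok = True
        except Exception:
            ok = False  # count failures instead of aborting the run
        return time.perf_counter() - start, ok

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        outcomes = list(pool.map(timed_call, range(total_requests)))

    latencies = [elapsed for elapsed, _ in outcomes]
    errors = sum(1 for _, ok in outcomes if not ok)
    # quantiles(n=100) returns 99 cut points; index 49 is p50, index 94 is p95.
    cuts = quantiles(latencies, n=100)
    return {
        "requests": total_requests,
        "error_rate": errors / total_requests,
        "p50_s": cuts[49],
        "p95_s": cuts[94],
    }
```

Running the same function at increasing `concurrency` values and watching where `p95_s` or `error_rate` starts to climb gives a first, rough view of where capacity runs out.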

Embrace DevOps Practices

Adopting DevOps methodologies unites development and operations teams, fostering better collaboration and communication. This integration breaks down the traditional silos that slow development and contribute to delays and higher defect rates. By aligning teams around shared objectives and automating workflows with tools and scripts, organizations can deliver new features and updates more quickly and efficiently. They can also address bugs, security vulnerabilities, and other issues as they arise, rather than after deployment. The DevOps mindset fosters a culture of ownership and accountability, both of which are critical for maintaining high standards of reliability, stability, and overall system performance.

Continuous integration and continuous delivery (CI/CD) pipelines, along with infrastructure as code (IaC) and comprehensive automated testing frameworks, further accelerate feedback loops within development workflows. These practices enable teams to identify and correct issues much earlier in the development lifecycle, reducing costly rework and improving overall quality. Over time, they yield higher deployment velocity, lower error rates, and a significantly improved user experience, leading to more satisfied customers and stakeholders.
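One way automated testing can shorten the performance feedback loop is a gate in the CI/CD pipeline that fails the build when latency regresses beyond an agreed limit. The sketch below assumes the pipeline already produces a baseline and a current p95 latency figure; the function names and the 10% default limit are hypothetical.

```python
def performance_gate(baseline_p95_ms: float, current_p95_ms: float,
                     max_regression_pct: float = 10.0) -> tuple[bool, str]:
    """Return (passed, message) for a p95 latency comparison."""
    if baseline_p95_ms <= 0:
        raise ValueError("baseline must be positive")
    change_pct = (current_p95_ms - baseline_p95_ms) / baseline_p95_ms * 100
    passed = change_pct <= max_regression_pct
    verdict = "PASS" if passed else "FAIL"
    message = (f"{verdict}: p95 changed {change_pct:+.1f}% "
               f"(limit +{max_regression_pct:.1f}%)")
    return passed, message


def main(argv: list[str]) -> int:
    """Hypothetical CLI entry point: argv holds [baseline_ms, current_ms]."""
    passed, message = performance_gate(float(argv[0]), float(argv[1]))
    print(message)
    return 0 if passed else 1  # a non-zero exit code fails the pipeline step
```

A pipeline step could call `main` with the two measurements and pass its return value to `sys.exit`, so a regression blocks the release instead of surfacing after deployment.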

Conclusion

Proactive strategies, including microservices, real-time analytics, synthetic monitoring, routine load testing, and DevOps practices, are increasingly recognized as the new gold standard in application performance management. As digital experiences become central to nearly every business model and customer interaction, organizations that apply these practices effectively are better positioned to deliver speed, stability, and innovation. With robust, predictive methodologies in place, such as continuous monitoring, proactive issue resolution, and performance optimization, companies can confidently exceed user expectations, enhance customer satisfaction, and drive sustained, long-term business growth in a highly competitive digital landscape.
